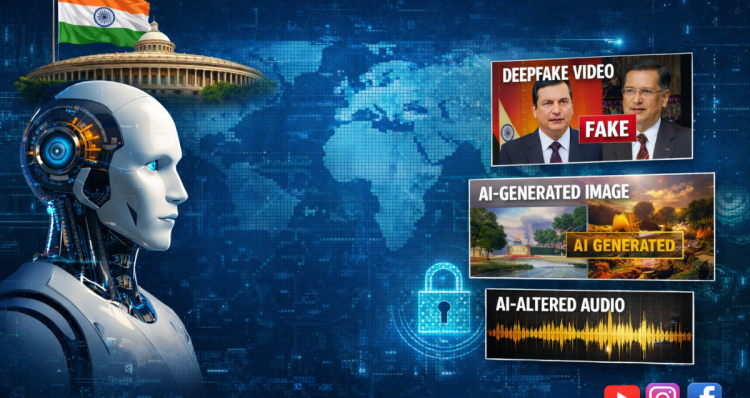

India’s New AI IT Rules 2026: Deepfake & Social Media Changes

Dubai | February 20, 2026

India’s government has officially enforced new IT rules for AI-generated content starting today. The regulations are February 2026 amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021.

The goal is clear: improve digital transparency, reduce the spread of deepfakes, and make online platforms more accountable for artificial intelligence (AI) content.

Here’s a simple explanation of what these new IT rules mean and how they affect social media users and tech companies.

What Are the New IT Rules About?

The Ministry of Electronics and Information Technology (MeitY) has, for the first time, introduced a formal legal framework to regulate AI-generated content in India.

Under the new rules:

- Social media platforms must clearly label AI-generated content.
- Platforms must embed permanent metadata and unique identifiers in such content.
- These identifiers cannot be removed, hidden, or altered.
- Companies must use automated tools to detect and block illegal AI content.
- Response timelines for government orders have been significantly reduced.

This marks a major step in India’s efforts to regulate artificial intelligence, deepfakes, and synthetic media.

What Is “Synthetically Generated” Content?

The government has now formally defined “synthetically generated information.”

It refers to audio, video, or images that:

- Have been created or modified using a computer
- Appear real
- Could be mistaken for genuine events or real people

This includes:

- Deepfake videos
- AI-generated voice clips
- Face-swapped images
- AI-created visuals of real individuals in fictional situations

What Is Not Included?

Not all digital editing falls under this rule. The following are exempt:

- Colour correction
- Noise reduction
- File compression
- Text translation
- Accessibility improvements
- Conceptual or illustrative content in research papers, documents, and presentations

For example, using an AI image in a PowerPoint presentation for illustration does not require a label. However, creating a fake video of a public figure saying something they never said would qualify as synthetically generated content and must be labeled.

What Do These Rules Mean for Social Media Users?

If you use platforms like Instagram, YouTube, or Facebook, you will notice some changes.

1. Clear Labels on AI Content

Any AI-generated post, reel, video, or audio clip must now carry a visible label, so users can see that it is AI-generated before they like, share, or forward it.

The labeling must be obvious and not hidden in fine print.

2. Mandatory Disclosure During Upload

When uploading content, users may be asked whether the content was created or modified using AI.

Providing false information may lead to:

- Account penalties
- Legal consequences under applicable Indian laws

Platforms must remind users of this requirement at least once every three months.

3. Permanent Digital Markers

Platforms must embed:

- Metadata
- Unique digital identifiers

These markers help trace content back to its origin. Under the rules, platforms must not allow them to be deleted or modified, so they are meant to remain attached even if the file is downloaded and re-uploaded.

This is intended to close the earlier loophole in which AI-generated content could lose its identification after being reposted.

New Responsibilities for Social Media Platforms

Large platforms such as Instagram, YouTube, and Facebook now have stricter obligations.

Automated Verification

Before publishing content, platforms must:

- Ask users if the content is AI-generated
- Use automated systems to verify that declaration

If a platform knowingly allows unmarked AI-generated content, it may lose its safe harbour protection, meaning it could be legally liable for the content.

Faster Government Compliance

Response timelines have been significantly shortened:

- Some government takedown orders must now be acted upon within three hours (previously 36 hours).
- Other deadlines have been reduced from 15 days to seven days.
- Certain actions must now be taken within 12 hours instead of 24.

This reflects the government’s push for quicker control of harmful digital content.

What Type of AI Content Will Be Blocked?

Platforms are required to actively detect and block AI-generated material that violates Indian law, including:

- Child sexual abuse material
- Obscene content
- Fake electronic records
- Content related to weapons or explosives
- Deepfakes designed to mislead or impersonate real individuals

Automated tools must be used to prevent the circulation of such content.

Legal Updates Under the New Framework

The rules also update legal references, replacing mentions of the Indian Penal Code with the Bharatiya Nyaya Sanhita, 2023.

The draft version of these amendments was released in October 2025, and platforms were given time until February 20, 2026, to comply with the final notification.

Why These AI Rules Matter

India’s new IT regulations mark a major shift in how AI-generated content, deepfakes, and synthetic media are handled online.

Key impacts include:

- Increased transparency in digital content
- Greater platform accountability
- Stronger protection against misinformation
- Improved tracing of harmful AI content
- Faster government response to violations

For users, this means greater clarity about what is real and what is AI-created. For platforms, it introduces stricter compliance requirements and higher legal responsibility.

Final Thoughts

The new IT rules on AI-generated content represent a significant development in India’s digital policy landscape. By making labeling mandatory and traceability compulsory, the government aims to create a safer and more transparent online environment.

As artificial intelligence becomes more advanced, these regulations are likely to shape how digital platforms operate and how users interact with content in the future.

Understanding these changes will help both creators and consumers stay compliant and informed in the evolving world of AI and social media regulation.